A. Introduction

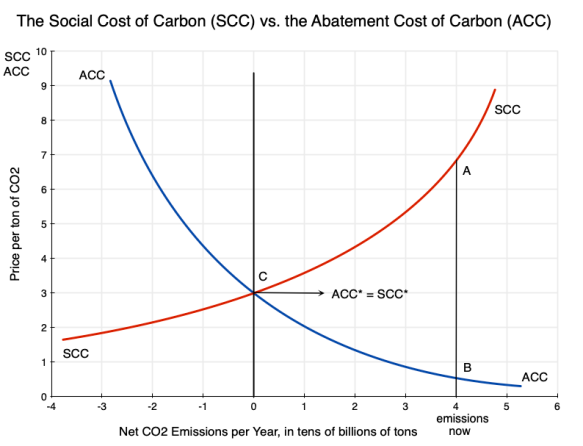

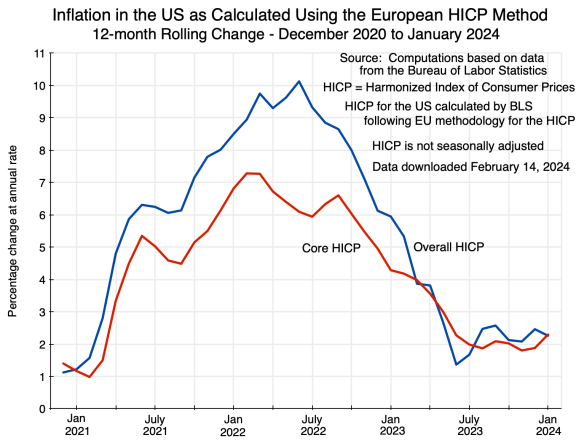

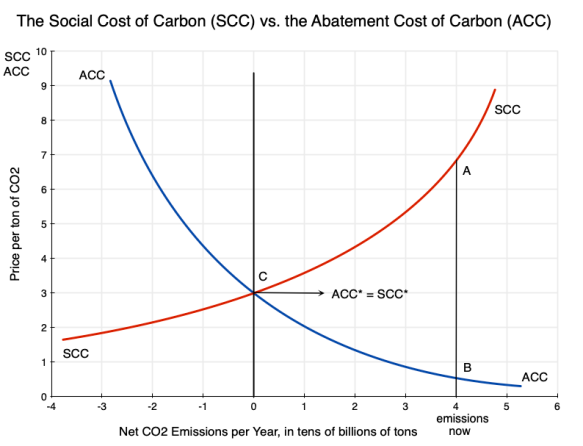

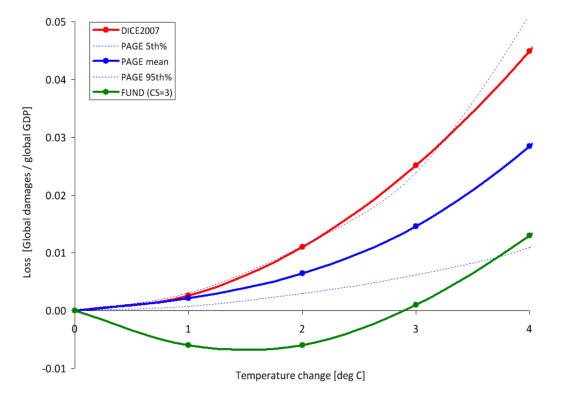

An earlier post on this blog discussed the basic economics of carbon pricing, and the distinction between the Social Cost of Carbon (SCC – the cost to society from the damage done when an extra unit of carbon dioxide, CO2, is released into the atmosphere) and the Abatement Cost of Carbon (ACC – what it costs to reduce the emissions of CO2 by a unit). Using the diagram from that post that is copied above, the post discussed some of the implications one can draw simply from an understanding that there are such curves, their basic shapes, and their relationships to each other.

There remains the question of what the actual values of the SCC and ACC might be under present conditions, and how one might obtain estimates of those values. That is not easy and can get complicated, but knowing what such values might be (even approximately) is of use as decisions are made on how best to address CO2 emissions.

This post will look at how estimates have been obtained for the SCC. A subsequent post will look at how the ACC can be estimated. The process to obtain SCC estimates is particularly complex, with significant limitations. While a number of researchers and groups have arrived at estimates for the SCC, I will focus on the specific approach followed by an interagency group established by the US federal government soon after a court ruling in 2008 that some such process needed to be established. The estimates they obtained (which were then updated several times as the process was refined) have been perhaps the most widely followed and influential estimates made of the SCC, with those estimates used in the development of federal rules and regulations on a wide range of issues where CO2 emissions might be affected.

While that interagency group had the resources to make use of best practices developed elsewhere, with a more in-depth assessment than others were able to do, there are still major limitations. In the sections below, we will first go through the methodology they followed and then discuss some of its inherent limitations. The post will then review the key issue of what discount rates should be used to discount back to the present the damages that will be ongoing for many years from a unit of CO2 that is released today (with those impacts lasting for centuries). The particular SCC estimates obtained will be highly sensitive to the discount rates used, and there has been a great deal of debate among economists on what those rates should be.

The limitations of the process are significant. But the nature of the process followed also implies that the SCC estimates arrived at will be biased too low, for a number of reasons. The easiest to see is that the estimated damages from the higher global temperatures are from a limited list: those where they could come up with some figure (possibly close to a guess) on damages following from some given temperature increase. The value of non-marketed goods (such as the existence of viable ecosystems – what environmentalists call existence values) were sometimes counted but often not, but when included the valuation could only be basically a guess. Other damages (including, obviously, those we are not able to predict now) were ignored and implicitly treated as if they were zero.

More fundamentally, the methodologies do not (and cannot, due not just to a lack of data but also inherent uncertainties) take adequately into account the possibility of catastrophic climate events resulting from a high but certainly possible increase in temperatures from the CO2 we continue to release into the air. Feedback loops are important, but are not well understood. If these possibilities are taken into account, the resulting SCC would be extremely high. We just do not know how high. In the limit, under plausible functional forms for the underlying relationships (in particular that there are “fat tails” in the distributions due to feedback effects), the SCC could in theory approach infinity. Nothing can be infinite in the real world, of course, but recognition of these factors (which in the standard approaches are ignored) implies that the SCC when properly evaluated will be very high.

We are then left with the not terribly satisfactory answer that we do not, and indeed cannot, really know what the SCC is. All we can know is that it will be very high at our current pace of releasing CO2 into the air. That can still be important, since, when coupled with evidence that the ACC is relatively low, it is telling us (in terms of the diagram at the top of this post) that the SCC will be far above the ACC. Thus society will gain (and gain tremendously) from actions to cut back on CO2 emissions.

I argue that this also implies that for issues such as federal rule-making, the SCC should be treated in a fashion similar to how monetary figures are assigned to basic parameters such as the “value of a statistical life” (VSL). The VSL is in principle the value assigned to an average person’s life being saved. It is needed in, for example, determining how much should be spent to improve safety in road designs, in the consideration of public health measures, in environmental regulations, and so on. No one can really say what the value of a life might be, but we need some such figure in order to make decisions on expenditures or regulatory rules that will affect health and safety. And whatever that value is, one should want it to be used consistently across different regulatory and other decisions, rather than some value for some decision and then a value that is twice as high (or twice as low) for some other decision.

The SCC should best be seen as similar to a VSL figure. We should not take too seriously the specific number arrived at (all we really know is that the SCC is high), but rather agree on some such figure to allow for consistency in decision-making for federal rules and other actions that will affect CO2 emissions.

This turned out to be a much longer post than I had anticipated when I began. And I have ended up in a different place than I had anticipated when I began. But I have learned a good deal in working my way through how SCC estimates are arrived at, and in examining how different economists have come to different conclusions on some of the key issues. Hopefully, readers will find this also of interest.

The issue is certainly urgent. The post on this blog prior to this one looked at the remarkable series of extreme climate events of just the past few months of this summer. Records have been broken on global average surface temperatures, on ocean water temperatures, and on Antarctic sea ice extent (reaching record lows this season). More worryingly, those records were broken not by small margins, but by large jumps over what the records had been before. Those record temperatures were then accompanied by other extreme events, including numerous floods, local high temperature records being broken, especially violent storms, extensive wildfires in Canada that have burned so far this year well more than double the area burned in any previous entire year (with consequent dangerous smoke levels affecting at different times much of the US as well as Canada itself), and other climate-related disasters. Climate events are happening, and sometimes with impacts that had not earlier been anticipated. There is much that we do not yet know about what may result from a warmer planet, and that uncertainty itself should be a major cause of concern.

B. How the SCC is Estimated

This section will discuss how the SCC is in practice estimated. While some of the limitations on such estimates will be clear as we go through the methodology, the section following this one will look more systematically at some of those limitations.

There have been numerous academic studies that have sought to determine values for the SCC, and the IPCC (the Intergovernmental Panel on Climate Change) and various country governments have come up with estimates as well. There can be some differences in the various approaches taken, but they are fundamentally similar. I will focus here on the specific process used by the US government, where an Interagency Working Group (IWG) was established in 2009 during the Obama administration to arrive at an estimate. The IWG was convened by the Office of Management and Budget with the Council of Economic Advisers (both in the White House), and was made up of representatives of twelve different government cabinet-level departments and agencies.

The IWG was established in response to a federal court order issued in 2008 (the last year of the Bush administration). Federal law requires that economic and social impacts be taken into account as federal regulations are determined as well as in whether federal funds can be used to support various projects. The case before the court in 2008 was on how federal regulations on automotive fuel economy standards are set. The social cost of the resulting CO2 emissions will matter for this, but by not taking that into account up until that point, the federal government was implicitly pricing it at zero. The court said that while there may indeed be a good deal of uncertainty in what cost should be set for that, the cost was certainly not zero. The federal government was therefore ordered by the court to come up with its best estimate for what this should be (i.e. for what the SCC should be) and apply it. The IWG was organized in response to that court order.

The first set of estimates was issued in February 2010, with a Technical Support Document explaining in some detail the methodology used. While the specifics have evolved over time, the basic approach has remained largely the same and my purpose here is to describe the essential features of how the SCC has been estimated. Much of what I summarize here comes from this 2010 document. There is also a good summary of the methodology followed, prepared by three of the key authors who developed the IWG approach, in a March 2011 NBER Working Paper. I will not go into all the details on the approach used by the IWG (see those documents if one wants more) but rather will cover only the essential elements.

The IWG updated its estimates for the SCC in May 2013, in July 2015, and again in August 2016 (when they also issued in a separate document estimates using a similar methodology for the social cost of two other important greenhouse gases: the Social Cost of Methane and the Social Cost of Nitrous Oxide). These were all during the Obama administration. The Trump administration then either had to continue to use the August 2016 figures or issue its own new estimates. It of course chose the latter, but followed a process that was basically a farce to come up with figures that were so low as to be basically meaningless (as will be discussed below). The Biden administration then issued a new set of figures in February 2021 – soon after taking office – but those “new” figures were, as they explained, simply the 2016 figures (for methane and nitrous oxide as well as for CO2) updated to be expressed in terms of 2020 prices (the prior figures had all been expressed in terms of 2007 prices – the GDP deflator was used for the adjustment). A more thorough re-estimation of these SCC values has since been underway, but finalization has been held up in part due to court challenges.

The Social Cost of Carbon is an estimate, in today’s terms, of what the total damages will be when a unit of CO2 is released into the atmosphere. The physical units normally used are metric tons (1,000 kilograms, or about 2,205 pounds). The damages will start in the year the CO2 is emitted and will last for hundreds of years, as CO2 remains in the atmosphere for hundreds of years. Unlike some other pollutants (such as methane), CO2 does not break down in the atmosphere into simpler chemical compounds, but rather is only slowly removed from the atmosphere due to other processes, such as absorption into the oceans or into new plant growth. The damages (due to the hotter planet) in each of the future years from the ton of CO2 emitted today will then be discounted back to the present based on some discount rate. How that works will be discussed below, along with a review of the debate on what the appropriate discount rate should be. The discount rate used is important, as the estimates for the SCC will be highly sensitive to the rate used.

It is important also to be clear that while there may well be (and indeed normally will be) additional emissions of CO2 from the given source in future years, the SCC counts only the cost of a ton emitted in the given year. That is, the SCC is the cost of the damages resulting from a single ton of CO2 emitted once, at some given point in time.

In very summary form, the process of estimating the SCC will require a sequence of steps, starting with an estimation of how much CO2 concentrations in the atmosphere will rise per ton of CO2 released into the air. It will then require estimates of what effect those higher CO2 concentrations will have on global surface temperatures in each future year; the year by year damages (in economic terms) that will be caused by the hotter climate; and then the sum of that series of damages discounted back to the present to provide a figure for what the total cost to society will be when a unit of CO2 is released into the air. That sum is the SCC. The discussion that follows will elaborate on each of those steps, where an integrated model (called an Integrated Assessment Model, or IAM) is normally used to link all those steps together. The IWG in fact used three different IAMs, each run with values for various parameters that the IWG provided in order to ensure uniform assumptions. This provided a range of ultimate estimates. It then took a simple average of the values obtained across the three models for the final SCC values it published. The discussion below will elaborate on all of this.

a) Step One: The impact on CO2 concentrations from a release of a unit of CO2, and the impact of those higher concentrations on global temperatures

The first step is to obtain an estimate of the impact on the concentration of CO2 in the atmosphere (now and in all future years) from an additional ton of CO2 being emitted in the given initial year. This is straightforward, although some estimate will need to be made on the (very slow) pace at which the CO2 will ultimately be removed from the atmosphere in the centuries to come. While there are uncertainties here, this will probably be the least uncertain step in the entire process.

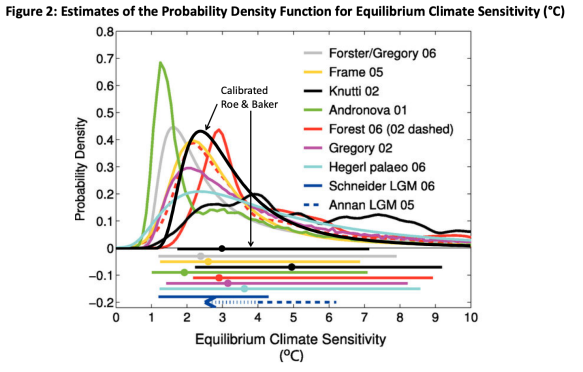

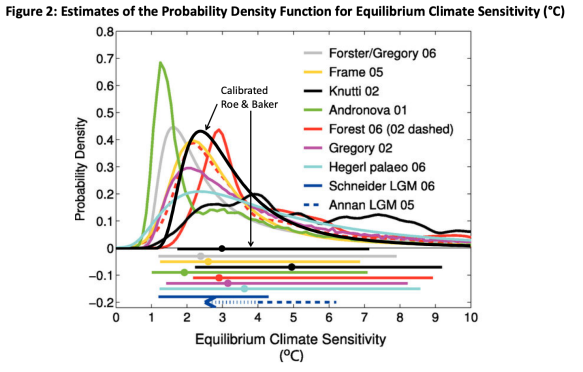

From the atmospheric concentrations of CO2 (and other greenhouse gases, based on some assumed scenario of what they will be), an estimate will then need to be made of the impact on global temperatures following from the higher concentration of CO2. A model will be required for this, where a key parameter is called the “equilibrium climate sensitivity”. This is defined as how far higher global temperatures would ultimately increase (over a 100 to 200-year time horizon) should the CO2 concentration in the atmosphere rise to double what it was in the pre-industrial era. Such a doubling would bring it to a concentration of roughly 550 ppm (parts per million).

There is a fair degree of consensus that the direct effect of a doubling of the CO2 concentration in the atmosphere would increase global average surface temperatures by about 1.2°C. However, there will then be feedback effects, and the extent and impact of those feedback effects are highly uncertain. This was modeled in the IWG work as a probability distribution for what the equilibrium climate sensitivity parameter might be, with a median and variation around that median based on a broad assessment made by the IPCC on what those values might be. Based on the IPCC work, the IWG assumed that the median value for the parameter was that global average surface temperatures would increase by 3.0°C over a period of 100 to 200 years should the CO2 concentration in the atmosphere rise to 550 ppm and then somehow kept there. They also assumed that the distribution of possible values for the parameter would follow what is called a Roe & Baker distribution (which will be discussed below), and that there would be a two-thirds probability that the increase would be between 2.0 °C and 4.5 °C over the pre-industrial norm.

The increase from the 1.2°C direct effect to the 3.0°C longer-term effect is due to feedback effects – which are, however, not well understood. Examples of such feedback effects are the increased absorption of sunlight by the Arctic Ocean – thus warming it – when more of the Arctic ice cover has melted (as the snow on the ice cover is white and reflects light to a much greater extent than dark waters); the release of methane (a highly potent greenhouse gas) as permafrost melts; the increased number of forest fires releasing CO2 as they burn, as forests dry out due to heat and drought as well as insect invasions such as from pine bark beetles; and other such effects.

Based on this assumed probability distribution for how high global temperatures will rise as a consequence of a higher concentration of CO2 in the atmosphere, the IWG ran what are called “Monte Carlo simulations” for what the resulting global temperatures might be. While the mean expected value was that there would be a 3.0°C rise in temperatures should the CO2 concentration in the atmosphere rise to 550 ppm, this is not known with certainty. There is variation in what it might be around that mean. In a Monte Carlo simulation, the model is repeatedly run (10,000 times in the work the IWG did), where for each run certain of the parameter values (in this case, the climate sensitivity parameter) are chosen according to the probability distribution assumed. The final estimate used by the IWG was then a simple average over the 10,000 runs.

b) Step Two: The damage from higher global temperatures on economic activity

Once one has an estimate of the impact on global temperatures one will need an estimate of the impact of those higher temperatures on global output. There will be effects both directly from the higher average temperatures (e.g. lower crop yields), but also from the increased frequency and/or severity of extreme weather events (such as storms, droughts in some places and floods in others, severe heat and cold snaps, and so on). A model will be needed for this, and will provide an estimate of the impact relative to some assumed base path for what output would otherwise be. The sensitivity of the economic impacts to those higher global temperatures is modeled through what are called “damage functions”. Those damage functions relate a given increase in global surface temperatures to some reduction in global consumption – as measured by the concepts in the GDP accounts and often expressed as a share of global GDP.

Estimating what those damage functions might be is far from straightforward. There will be huge uncertainties, as will be discussed in further detail below where the limitations of such estimates are reviewed. The problem is in part addressed by focussing on a limited number of sectors (such as agriculture, buildings and structures exposed to storms, human health effects, the loss of land due to sea level rise and from increased salination, and similar). However, in limiting which sectors are looked at, the possible impacts will not be exhaustive and hence will underestimate what the overall economic impacts might be. They also do not include non-economic impacts.

In addition, the impacts will be nonlinear, where the additional damage in going from, say, a 2 degree increase in global temperatures to a 3 degree increase will be greater than in going from a 1 degree increase to 2 degrees. The models in general try to incorporate this by allowing for nonlinear damage functions to be specified. But note that a consequence (both here and elsewhere in the overall models) is that the specific SCC found for a unit release of CO2 into the air will depend on the base scenario assumed. The base path matters, and any given SCC estimate can only be interpreted in terms of an extra unit of CO2 being released relative to that specified base path. The base scenario being assumed should always be clearly specified, but it not always is.

c) Step Three: Discount the year-by-year damage estimates back to the starting date. The SCC is the sum of that series.

The future year-by-year estimates of the economic value of the damages caused by the additional ton of CO2 emitted today need then to all be expressed in terms of today’s values. That is, they will all need to be discounted back to the base year of when the CO2 was emitted. More precisely, the models must all be run in a base scenario with the data and parameters as set for that scenario, and then run again in the same way but with one extra ton of CO2 emitted in the base year. The incremental damage in each year will then be the difference in each year between the damages in those two runs. Those incremental damages will then be discounted back to the base year.

The estimated value will be sensitive to the discount rate used for this calculation, and determining what that discount rate should be has been controversial. That issue will be discussed below. But for some given discount rate, the annual values of the damages from a unit of CO2 being released (over centuries) would all be discounted back to the present. The SCC is then the sum of that series.

d) The Models Used by the IWG, and the Resulting SCC Estimates

As should be clear from this brief description, modeling these impacts to arrive at a good estimate for the SCC is not easy. And as the IWG itself has repeatedly emphasized, there will be a huge degree of uncertainty. To partially address this (but only partially, and more to identify the variation that might arise), the IWG followed an approach where they worked out the estimates based on three different models of the impacts. They also specified five different base scenarios on the paths for CO2 emissions, global output (GDP), and population, with their final SCC estimates then taken to be an unweighted average over those five scenarios for each of the three IAM models. They also ran each of these five scenarios for each of the three IAM models for each of three different discount rates (although they then kept the estimates for each of the three discount rates separate).

The three IAM models used by the IWG were developed, respectively, by Professor William Nordhaus of Yale (DICE, for Dynamic Integrated Climate and Economy), by Professor Chris Hope of the University of Cambridge (PAGE, for Policy Analysis of the Greenhouse Effect), and by Professor Richard Tol of the University of Sussex and VU University Amsterdam (FUND, for Climate Framework for Uncertainty, Negotiation and Distribution). Although similar in the basic approach taken, they produce a range of outcomes.

i) The DICE Model of Nordhaus

Professor Nordhaus has developed and refined variants of his DICE model since the early 1990s, with related earlier work dating from the 1970s. His work was pioneering in many respects, and for this he received a Nobel Prize in Economics in 2018. But the prize was controversial. While the development of his DICE model was innovative in the level of detail that he incorporated, and brought attention to the need to address the impacts of greenhouse gas emissions and the resulting climate change seriously, his conclusion was that limiting CO2 emissions at that time was not urgent (even though he argued it eventually would be needed). Rather, he argued, an optimal approach would follow a ‘policy ramp’ with only modest rates of emissions reductions in the near term, followed by sharp reductions in the medium and long terms. A primary driver of this conclusion was the use by Nordhaus of a relatively high discount rate – an issue that, as noted before, will be discussed in more depth below.

ii) The PAGE Model of Hope

The PAGE model of Professor Hope dates from the 1990s, with several updates since then. The PAGE2002 version was used by Professor Nicholas Stern (with key parameters set by him, including the discount rate) in his 2006 report for the UK Treasury titled “The Economics of Climate Change” (commonly called the Stern Review). The Stern Review came to a very different conclusion than Professor Nordhaus, and argued that addressing climate change through emissions reductions was both necessary and urgent. Professor Nordhaus, in a 2007 review of the Stern Review, argued that the differences in their conclusions could all be attributed to Stern using a much lower discount rate than he did (which Nordhaus argued was too low). Again, this controversy on the proper discount rates to use will be discussed below. But note that the implications are important: One analyst (Nordhaus) concluded that the climate change was not urgent and that it would be better to start with only modest measures (if any) and then ramp up limits on CO2 emissions only slowly over time. The other (Stern) concluded that the issue was urgent and should be addressed immediately.

iii) The FUND Model of Tol

Professor Tol, the developer of the FUND model, is controversial as he has concluded that “The impact of climate change is relatively small.” But he has also added that “Although the impact of climate change may be small, it is real and it is negative.” And while he was a coordinating lead author for the IPCC Fifth Assessment Report, he withdrew from the team for the final summary report as he disagreed with the presentation, calling it alarmist. Unlike the other IAMs, the FUND model of Tol (with the scenarios as set by the IWG) calculated that the near-term impact of global warming resulting from CO2 emissions will not just be small but indeed a bit positive. This could follow, in the “damage” functions that Tol postulated, from health benefits to certain populations (those living in cold climates) from a warmer planet and from the “fertilization” effect on plant growth from higher concentrations of CO2 in the air.

These assumptions, leading to an overall net positive effect when CO2 concentrations are not yet much higher than they are now, are, however, controversial. And Tol admitted that he left out certain effects, such as the impacts of extreme weather events and biodiversity loss. In any case, the net damages ultimately turn negative even in Tol’s model.

While Tol’s work is controversial, the inclusion of the FUND model in the estimation of the SCC by the IWG shows that they deliberately included a range of possible outcomes in modeling the impacts of CO2 emissions on climate change. The Tol model served to indicate what a lower bound on the SCC might be.

iv) The Global Growth Scenarios and the Resulting SCC Estimates

While the IWG made use of the DICE, PAGE, and FUND models, it ran each of these models with a common set of key assumptions specifically on 1) the equilibrium climate sensitivity parameter (expressed as a probability distribution and discussed above); 2) each of five different scenarios on what baseline global growth would be; and 3) each of three different social discount rate assumptions. Thus while the IWG used three IAMs that others had created, the IWG’s estimates of the resulting SCC values will differ from what the creators of those models had themselves generated (as those creators of the IAMs have used their own set of assumptions for these different parameters and scenarios). Thus the resulting SCC estimates of the IWG will differ from the SCC estimates one might see in reports on the DICE, PAGE, and FUND models. The IWG used a common set of assumptions on these key inputs in order that the resulting SCC estimates (across the three IAM models) will depend only on differences in the modeled relationships, not on assumptions made on key inputs to those models.

The three IAM models were each run with five different baseline scenarios of what global CO2 emissions, GDP, and population might be year by year going forward. For these, the IWG used four models (with the names IMAGE, MERGE, Message, and MiniCam), from a group of models that had been assembled under the auspices of the Energy Modeling Forum of Stanford University. These models provided an internally consistent view of future global GDP, population, and CO2 emissions. Specifically, they used the “business-as-usual” scenarios of those four models.

The IWG then produced a fifth scenario (based on a simple average of runs from each of the four global models just referred to) where the concentration of CO2 in the atmosphere was kept from ever going above 550 parts per million (ppm). Recall from above that at 550 ppm the CO2 concentrations would be roughly double the concentration in the pre-industrial era. But one should note that there would still be CO2 being emitted in 2050 in these 550 ppm scenarios: The 550 ppm ceiling would be reached only on some date after 2050 in this scenario. These were therefore not net-zero scenarios in 2050, but ones with CO2 still being emitted in that year (although on a declining trajectory, and well below the emission levels of the “business as usual” scenarios).

Keep in mind also that a 550 ppm scenario is far from a desirable scenario in terms of the global warming impact. As discussed above, such a concentration of CO2 in the air would be roughly double the concentration in the pre-industrial era, and the ultimate effect (the “equilibrium climate sensitivity”) of a doubling in CO2 concentration would likely be a 3.0°C rise in global average surface temperatures over that in the pre-industrial period (although with a great deal of uncertainty around that value). This is well above the 2.0°C maximum increase in global surface temperatures agreed to in the 2015 Paris Climate Accords – where the stated goal was to remain “well below” a 2.0°C increase, and preferably to stay below a 1.5°C rise. (In the estimates of at least one official source – that of the Copernicus Climate Change Service of the EU – global average surface temperatures had already reached that 1.5°C benchmark in July and again in August 2023, over what they had averaged in those respective months in the pre-industrial, 1850 to 1900, period. For the year 2022 as a whole, global average surface temperatures were 1.2°C higher than in the pre-industrial period.) The Paris Accords have been signed by 197 states (more than the 195 UN members). And of the 197 signatories, 194 have ratified the accord. The three exceptions are Iran, Libya, and Yemen.

To determine the incremental damages due to the release of an additional ton of CO2 into the air in the base period, the IAM models were run with all the inputs (including the base path levels of CO2 emissions) of each of these five global scenarios, and then run again with everything the same except with some additional physical unit of CO2 emitted (some number of tons) in the base year. The incremental damages in each year would then be the difference in the damages between those two runs. Those incremental damages in each year (discounted back to the base year) would then be added up and expressed on a per ton of CO2 basis. That sum is the SCC.

Due to the debate on what the proper discount rate should be for these calculations, the IWG ran each of the three IAM models under each of the five global scenarios three sets of times: for discount rates of 5.0%, 3.0%, and 2.5%, respectively. Thus the IWG produced 15 model runs (for each of the 3 IAMs and 5 global scenarios) for each of the 3 discount rates, i.e. 45 model runs (and actually double this to get the incremental damages due to an extra ton of CO2 being emitted). For each of the three discount rates, they then took the SCC estimate to be the simple average of what was produced over the 15 model runs at that discount rate.

Actually, there were far more than 15 model runs for each of the three discount rates examined. As noted above, the “equilibrium climate sensitivity” parameter was specified not as a single – known and fixed – value, but rather as a probability distribution, where the parameter could span a wide range but with different probabilities on where it might be (high in the middle and then falling off as one approached each of the two extremes). The PAGE and FUND models also specified certain of the other relationships in their models as probability distributions. The IWG therefore ran each of the models via Monte Carlo simulations, as was earlier discussed, where for each of the five global scenarios and each of the three IAMs and each of the three discount rates, there were 10,000 model runs where the various parameters were selected in any given run based on randomized selections consistent with the specified probability distributions.

Through such Monte Carlo simulations, they could then determine what the distribution of possible SCC values might be – from the 1st percentile on the distribution at one extreme, through the 50th percentile (the median), to the 99th percentile at the other extreme, and everything in between. They could also work out the overall mean value across the 10,000 Monte Carlo simulations for each of the runs, where the mean values could (and typically did) differ substantially from the median, as the resulting probability distributions of the possible SCCs were not symmetric but rather significantly skewed to the right – with what are known as “fat tails”. The final SCC estimates for each of the three possible discount rates were then the simple means over the values obtained for the three IAM models, five global scenarios, and 10,000 Monte Carlo simulations (i.e. 150,000 for each, and in fact double this in order to obtain the incremental effect of an additional ton of CO2 being emitted).

The resulting SCC estimates could – and did – vary a lot. To illustrate, this table shows the calculated estimates for the SCC for the year 2010 (in 2007 dollars) from the 2010 initial report of the IWG:

The results are shown for each of three IAMs (DICE, PAGE, and FUND), each of the five global scenarios, and each of the three discount rates (5.0%, 3.0%, and 2.5%). The final column shows what the SCC would be at the 95th percentile of the distribution when a discount rate of 3.0% is used. Each of the values in the first three columns of the tables are the simple averages over the 10,000 Monte Carlo runs for each of the scenarios.

The SCCs for 2010 (in 2007$) are then the simple averages of the figures in this table for each of the three discount rates (i.e. the simple averages of each of the columns). As one can confirm by doing the arithmetic, the average at a discount rate of 5.0% is $4.7 per ton of CO2, at a discount rate of 3.0% it is $21.4, and at a discount rate of 2.5% it is $35.1.

The values of the SCC shown in the table obviously span a very broad range, and that range will not appear when the simple averages across the 15 cases (from three IAMs and five global scenarios) are the only figures people pay attention to. The IWG was clear on this, and repeatedly stressed the uncertainties, but the broad ranges in the underlying figures do not inspire confidence. A critic could pick and choose among these for a value supportive of whatever argument they wish to make. Under one model and one global scenario and one discount rate the SCC in 2010 is estimated to be a negative $2.7 per ton of CO2 (from the FUND model results, which postulated near-term net benefits from a warming planet). Under a different set of assumptions, the SCC estimate is as high as $65.5 per ton. And much of that variation remains even if one limits the comparison to a given discount rate.

The figures in the table above also illustrate the important point that the SCC estimate can only be defined and understood in terms of some scenario of what the base path of global GDP and population would otherwise be, and especially of what the path of CO2 (and other greenhouse gas) emissions would be. In scenarios where CO2 emissions are high (e.g. “business as usual” scenarios), then damages will be high and the benefit from reducing CO2 emissions by a unit will be high. That is, the SCC will be high. But in a scenario where steps are taken to reduce CO2 emissions, then the damages will be less and the resulting SCC estimate will be relatively low. This is seen in the SCC estimates for the “550 average” scenarios in the table, which are lower (for each of the IAMs and each of the discount rates) than in the four “business as usual” scenarios.

The paradox in this, and apparent contradiction, is that to reduce CO2 emissions (so as to get to the 550 ppm scenario) one will need a higher – not a lower – cost assigned to CO2 emissions. But the contradiction is apparent only. If one were able to get on to a path of lower CO2 emissions over time, then the damages from those CO2 emissions would be smaller and the SCC in such a scenario would be lower. The confusion arises because the SCC is not a measure of what it might cost to reduce CO2 emissions, but rather a measure of the damage done (in some specific scenario) from such emissions.

Finally, such SCC estimates were produced in the same manner for CO2 that would be released in 2010, or in 2020, or in 2030, or in 2040, or in 2050. The SCC of each would be based on emissions in that specified individual year, based on the resulting damages going forward from that year. The values of the SCC in individual intervening years were then simply interpolated between the decennial figures. Keep in mind that these SCC values will be the SCC of one ton of CO2 – released once, not each year – in that specified year. The resulting SCC estimates are not the same over time but rather increase as world population and GDP are higher over time (at least in the scenarios assumed for these models). With larger absolute levels of global GDP, damages in absolute terms for some estimated percentage of global GDP will be higher over time. In addition, there will be the nonlinear impacts resulting from the increase over time of CO2 concentrations in the atmosphere, leading to greater damages and hence higher SCC values.

The resulting figures for the SCC in the estimates issued in February 2021, were then:

Social Cost of CO2, 2020 to 2050 (in 2020 dollars per metric ton of CO2)

| Year/Discount Rate |

5.0%

|

3.0%

|

2.5%

|

3.0%

|

|

Average |

Average |

Average |

95th Percentile |

| 2020 |

$14 |

$51 |

$76 |

$152 |

| 2030 |

$19 |

$62 |

$89 |

$187 |

| 2040 |

$25 |

$73 |

$103 |

$225 |

| 2050 |

$32 |

$85 |

$116 |

$260 |

Of these figures, the IWG recommended that the SCC values at the 3.0% discount rate should normally be the ones used as the base case by analysts (e.g. $51 per ton for CO2 emitted in 2020). The other SCC values might then be of use in sensitivity analyses to determine the extent to which any conclusion drawn might depend on the discount rate assumed. As noted before, these February 2021 estimates are the same as those issued in 2016, but adjusted to the 2020 general price level (where the earlier ones had all been in 2007 prices).

For those who have followed the discussion to this point, it should be clear that the process was far from a simple one. And it should be clear that there will be major uncertainties, as the IWG has repeatedly emphasized. Some of the limitations will be discussed next.

C. Limitations of the SCC Estimates

While the methodology followed by the IWG in arriving at its estimates for the SCC might well reflect best practices in the field, there are nonetheless major limitations. Among those to note:

a) To start, and most obviously, the resulting SCC estimates still vary widely despite often using simple averages to narrow the ranges. The figures for 2020 in the table above, for example, range from $14 per ton of CO2 (at a discount rate of 5%) to $76 (at a discount rate of 2.5%) – more than five times higher. The IWG recommendation is to use the middle $51 per ton figure (at the 3% discount rate) for most purposes, but the wide range at different discount rates will lead many not to place a good deal of confidence in any of the figures.

Furthermore, estimates of the SCC have been made by others that differ significantly from these. In the US government itself, the Environmental Protection Agency issued for public comment in September 2022 a set of SCC estimates that are substantially higher than the February 2021 IWG figures. This was done outside of the IWG process – in which the EPA participates – and I suspect that either some bureaucratic politicking is going on or that this is a trial balloon to see the reaction. For the year 2020, the EPA document has an SCC estimate of $120 per ton of CO2 at a discount rate of 2.5% – well above the IWG figure of $76 at a 2.5% discount rate. The EPA also issued figures of $190 at a discount rate of 2.0% and $340 at a discount rate of 1.5%. Various academics and other entities have also produced estimates of the SCC, that differ even more.

It can also be difficult to explain to the general public that an SCC estimate can only be defined in terms of some specified scenario of how global greenhouse gas emissions will evolve in the future. Reported SCC values may differ from each other not necessarily because of different underlying models and parameter assumptions, but because they are assuming different scenarios for what the base path of CO2 emissions might be.

These differences in SCC estimates should not be surprising given the assumptions that are necessary to estimate the SCC, as well as the difficulties. But the resulting reported variation undermines the confidence one may have in the specific values of any such estimates. The concept of the SCC is clear. But it may not be possible to work out a good estimate in practice.

b) As a simple example of the uncertainties, it was noted above that the IAM models postulate a damage function for the relationship between higher global temperatures and the resulting damage to global output. It is difficult to know what these might be, quantitatively. The more detailed IAM models will break this down by broad sectors (e.g. agriculture, impacts on health, damage to structures from storms, etc.), but these are still difficult to assess quantitatively. Consider, for example, making an estimate of the damage done last year – or any past year – as a consequence of higher global temperatures. While we know precisely what those temperatures were in those past years (so there is no uncertainty on that issue), estimates will vary widely of the damage done as a consequence of the higher temperatures. Different experts – even excluding those who are climate skeptics – can arrive at widely different figures. While we know precisely what happened in the past, working out all the impacts of warming temperatures will still be difficult. But the IAM models need to estimate what the damages would be in future years – and indeed in distant future years – when there is of course far greater uncertainty.

Note also that the issue here is not one of whether any specific extreme weather event can be attributed with any certainty to higher global temperatures. While the science of “extreme event attribution” has developed over the last two decades, it is commonly misunderstood. It is never possible to say with certainty whether any particular extreme storm happened due to climate change and would not have happened had there been no climate change. Nor is that the right question. Weather is variable, and there have been extreme weather events before the planet became as warm as it is now. The impact of climate change is rather that it makes such extreme weather events more likely.

Consider, for example, the impact of smoking on lung cancer. We know (despite the denial by tobacco companies for many years) that smoking increases the likelihood that one will get lung cancer. This is now accepted. Yet we cannot say with certainty that any particular smoker who ends up with lung cancer got it because he or she smoked. Lung cancers existed before people were smoking. What we do know is that smoking greatly increases the likelihood a smoker will get cancer, and we can make statistical estimates of what the increase in that likelihood is.

Similarly, we cannot say for certain that any particular extreme weather event was due to climate change. But this does not justify conservative news outlets proclaiming that since we cannot prove this for an individual storm, that therefore we have no evidence on the impact of climate change. Rather, just like for smoking and lung cancer, we can develop estimates of the increased likelihood of this happening, and from this work out estimates of the resulting economic damages. If, for example, climate change doubles the likelihood of some type of extreme weather event, then we can estimate that half of the overall damages observed by such weather events in some year can be statistically attributed to climate change.

This is still difficult to do well. There is not much data, and one cannot run experiments on this in a lab. Thus estimates of the damages resulting from climate change – even in past years – vary widely. But one needs such estimates of damages resulting from climate change for the SCC estimate – and not simply for past years (with all we know on what happened in the past) but rather in statistical terms for future years.

c) It would be useful at this point also to distinguish between what economists call “risk” and what they call “uncertainty”. This originated in the work of Frank Knight, with the publication of his book “Risk, Uncertainty and Profit” in 1921. And while not commonly recognized as often, John Maynard Keynes introduced a similar distinction in his “A Treatise on Probability”, also published in 1921.

As Knight defined the terms, “risk” refers to situations where numerical probabilities may be known mathematically (as in results when one rolls dice) or can be determined empirically from data (from repeated such events in the past). “Uncertainty”, in contrast, refers to situations where numerical probabilities are not known, and possibly cannot be known. In situations of risk, one can work out the relative likelihood that some event might occur. In situations of uncertainty, one cannot.

In general language, we often use these terms interchangeably and it does not really matter. But for certain situations, the distinction can be important.

Climate change is one. There is a good deal of uncertainty about how the future climate will develop and what the resulting impacts might be. And while certain effects are predictable as a consequence of greenhouse gas emissions (and indeed we are already witnessing this), there are likely to be impacts that we have not anticipated, That is, for both the types of events that we have already experienced and even more so for the types of events that may happen in the future as the planet grows warmer, there is “uncertainty” (in the sense defined by Knight) on how common these might be and on what the resulting damages might be. There is simply a good deal of uncertainty on the overall magnitude of the economic and other impacts to expect should there be, say, a 3.0 °C increase in global temperatures. However, the IAMs have to treat these not as “uncertainties” in the sense of Knight, but rather as “risks”. That is, the IAMs assume a specific numerical relationship between some given temperature rise and the resulting impact on GDP.

The IAMs need to do this as otherwise they would not be able to arrive at an estimate of the SCC. However, one should recognize that a leap is being made here from uncertainties that cannot be known with any assurance to treatment as if they are risks that can be specified numerically in their models.

d) Thus the IAMs specify certain damage functions to calculate what the damages to the planet will be for a given increase in global temperatures. The difficulty, however, is that there is no data that can be used to assess this directly. The world has not seen temperatures at 3.0°C above what they were in the pre-industrial era (at least not when modern humans populated the world). The economists producing the IAMs consulted various sector experts on this, but one needs to recognize that no one really knows. It can only be a guess.

But the IAMs need something in order to come up with an estimate of the SCC. So after reviewing what has been published and consulting with various experts, those developing the IAMs arrived at some figure on what the damages might be at some given increase in global temperatures. They expressed these in “consumption-equivalent” terms, which would include both the direct impact on production plus some valuation given (in some of the models using a “willingness-to-pay” approach) to impacts on non-marketed goods (such as adverse impacts on ecosystems – environmentalists call these existence values).

And while it is not fully clear whether and how the IAM models accounted for this, in principle the damages should also include damages to the stock of capital resulting from a higher likelihood of severe storm events. For example, if Miami were to be destroyed by a particularly severe hurricane made more likely due to a warming planet, the damages that year should include the total capital value of the lost buildings and other infrastructure, not just the impact on Miami’s production of goods and services (i.e. its GDP) that year. GDP does not take into account changes in the stock of capital. (And while Miami was used here as an example, the calculation of the damages would in fact be for all cities in the world and expressed probabilistically for the increase in the likelihood of such events happening somewhere on the planet in any given year in a warmer world.)

Based on such damage estimates (or guesses), they then calibrated their IAM models to fit the assumed figures. While I have expressed these in aggregate terms, in the models such estimates were broken down in different ways by major sectors or regions. For example, the DICE model (in the vintage used by the IWG for its 2010 estimates) looked at the impacts across eight different sectors (impacts such as on agriculture, on human health, on coastal areas from sea level rise, on human settlements, and so on). The PAGE model broke down the relationships into eight different regions of the world but with only three broad sectors. And the FUND model broke the impacts into eight economic sectors in 16 different regions of the world. FUND also determined the damages not just on the level of the future temperatures in any given year, but also on the rate of change in the average temperatures prior to that year.

The impacts were then added up across the sectors and regions. However, for the purposes of the discussion here, we will only look at what the three IAMs postulated would be the overall damages from a given temperature rise.

The IAM developers calibrated their damage functions to match some assumed level of damages (as a share of GDP) at a given increase in global temperatures. Damages at an increase of 3.0°C may have been commonly used as an anchor, and I will assume that here, but it could have been at some other specific figure (possibly 2.0°C or 2.5°C). They then specified some mathematical relationship between a given rise in temperatures and the resulting damages, and calibrated that relationship so that it would go through the single data point they had (i.e. the damages at 3.0°C, or whatever anchor they used). This is a bit of over-simplification (as they did this separately by sector and possibly by region of the world, and then aggregated), but it captures the essence of the approach followed.

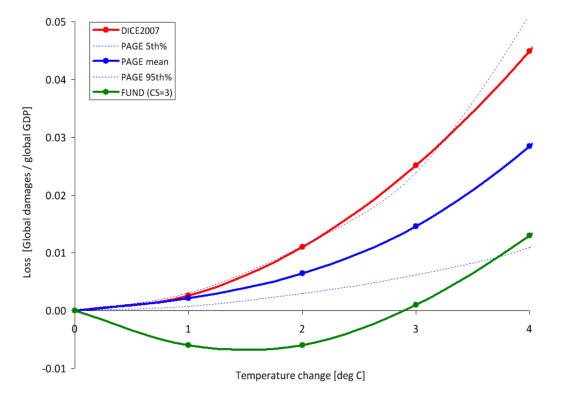

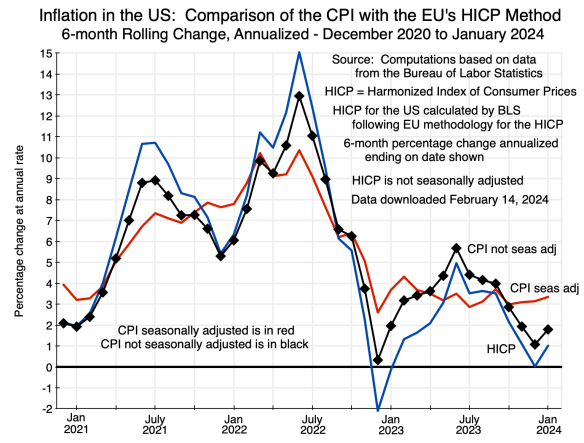

The IWG, in its original 2010 Technical Support Document, provided this chart for the resulting overall damages postulated in the IAM models (as a share of GDP) for temperatures up to 4.0 °C above those in the pre-industrial era, where for the purposes here the scenarios used correspond to the default assumptions of each model:

The curves in red, blue, and green are, respectively, for the DICE, PAGE, and FUND models (in the vintages used for the 2010 IWG report). And while perhaps difficult to see, the dotted blue lines show what the PAGE model estimated would be the 5th and 95th percentile limits around its mean estimate. It is obvious that these damage functions differ greatly. As the IWG noted: “These differences underscore the need for a thorough review of damage functions”.

The relationships themselves are smooth curves, but that is by construction. Given the lack of hard data, the IAMs simply assumed there would be some such smooth relationship between changes in temperatures and the resulting damages as a share of GDP. In the DICE and PAGE models these were simple quadratic functions constrained so that there would be zero damages for a zero change in temperatures. (The FUND relationship was a bit different to allow for the postulated positive benefits – i.e. negative losses – at the low increases in temperatures. It appears still to be a quadratic, but shifted from the origin.)

A quadratic function constrained to pass through the origin and then rise steadily from there requires only one parameter: Damages as a share of GDP will be equal to that parameter times the increase in global temperatures squared. For the DICE model, one can easily calculate that the parameter will equal 0.278. Reading from the chart (the IWG report did not provide a table with the specific values), the DICE model has that at a 3.0°C increase in temperatures (shown on the horizontal axis), the global damages will equal about 2.5% of GDP (shown on the vertical axis). Thus one can calculate the single parameter from 0.278 = 2.5 / (3.0 x 3.0). And one can confirm that this is correct (i.e. that a simple quadratic fits well) by examining what the predicted damages would be at, say, a 2.0°C temperature. It should equal 0.278 x (2.0×2.0), which equals damages of about 1.1% of GDP. Looking at the chart, this does indeed appear to be the damages according to DICE at a 2.0°C increase in global temperatures.

One can do this similarly for PAGE. At a 3.0°C rise in temperatures, the predicted damages are about 1.4% of GDP. The single parameter can be calculated from this to be 0.156. The predicted damages at a 2.0°C increase in temperatures would then be about 0.6°C, which is what one sees in the chart.

So what will the damages be at, say, a 2.0°C rise in temperatures? The DICE model estimates there will be a loss of about 1.1% of the then GDP; the PAGE model puts it at a loss of 0.6% of GDP; and the FUND model puts it at a gain of 0.6% of GDP. This is a wide range, and given our experience thus far with a more modest increase in global temperatures, all look to be low. In the face of such uncertainty, the IWG simply weighted each of the three models equally and calculated the SCC (for any given discount rate) as the simple average of the resulting estimates across the three models. But this is not a terribly satisfactory way to address such uncertainty, especially across three model estimates that vary so widely.

If anything, the estimates are probably all biased downward. That is, they likely underestimate the damages. Most obviously, they leave out (and hence treat as if the damages would be zero) those climate change impacts where they are unable to come up with any damage estimates. They will also of course leave out damages from climate change impacts that at this point we cannot yet identify. We do not know what we do not know. But even for impacts that have already been experienced at a more modest rise in global temperatures (such as more severe storms, flooding in some regions and drought in others, wildfires, extremely high heat waves impacting both crops and human health, and more) – impacts that will certainly get worse at a higher increase in global temperatures – the estimated impact of just 1.1% of GDP or less when temperatures reach 2.0°C over the pre-industrial average appears to be far too modest.

e) The damage functions postulate that the damages in any given year can be treated as a “shock” to what GDP would have been in that year, where that GDP is assumed to have followed some predefined base path to reach that point. In the next year, the base path is then exactly the same, and the damages due to climate change are again treated as a small shock to that predefined level of future GDP. That is, the models are structured so that their base path of GDP levels will be the same regardless of what happens due to climate change, and damages are taken as a series of annual shocks to that base path.

[Side note: The DICE model is slightly different, but in practice that difference is small. It treats the shock to GDP as affecting not only consumption but also savings, and through this also what investment will be. GDP in the next year will then depend on what the resulting stock of capital will be, using a simple production function (a Cobb-Douglas). But this effect will be small. If (in simple terms), consumption is, say, 80% of GDP and savings is 20%, and there is a 2% of GDP shock to what GDP would have been due to climate change with this split proportionally between consumption and savings, then savings will fall by 2% to 19.8% of GDP. The resulting addition to capital in the next period will be reduced, but not by much. The path will be close to what it would have been had this indirect effect been ignored.]

One would expect, however, that climate change will affect the path that the world economy will follow and not simply create a series of shocks to an unchanged base path. And due to compounding, the impact of even a small change to that base path can end up being large and much more significant than a series of shocks to some given path. For example, suppose that the base path growth is assumed to be 3.0% per year, but that due to climate change, this is reduced slightly to, say, 2.9% per year. After 50 years, that slight reduction in the rate of growth would lead GDP to be 4.7% lower than had it followed the base path, and after 100 years it would be 9.3% lower. Such a change in the path – even with just this illustrative very small change from 3.0% to 2.9% – will likely be of far greater importance than impacts estimated via a damage function constructed to include only some series of annual shocks to what GDP would be on a fixed base path.

It is impossible to say what the impact on global growth might be as a consequence of climate change. But it is likely to be far greater than the reduction in growth used in this simple example, which assumed a change of just 0.1% per year.

f) The damage functions also specify some simple global total for what the damages might be. The distribution of those damages across various groups or even countries is not taken into account, and hence neither do the SCC estimates. Rather, the SCC (the Social Cost of Carbon) treats the damages as some total for society as a whole.

Yet different groups will be affected differently, and massively so. Office workers in rich countries who go from air-conditioned homes to air-conditioned offices (in air-conditioned cars) may not be affected by as much as others (other than higher electricity bills). But farmers in poor countries may be dramatically affected by drought, floods, heat waves, salt-water encroachment on their land, and other possible effects.

This should not be seen as negating the SCC as a concept. It is what it is. That is, the SCC is a measure of the total impact, not the distribution of it. But one should not forget that even if the overall total impact might appear to be modest, various groups might still be severely affected. And those groups are likely in particular to be the poorer residents of this world.

g) It is not clear that the best way to address uncertainty on future global prospects (the combination of CO2 emissions, GDP, and population) is by combining – as the IWG did – four business-as-usual scenarios with one where some limits (but not very strong limits) are assumed to be placed on CO2 emissions. These are different. Rather, they could have estimated the SCC based on the business-as-usual scenarios (using an average) and then shown separately the SCC based on scenarios where CO2 emissions are controlled in various specified ways.

The IWG might not have done this as the resulting different sets of SCC estimates would likely have been even more difficult to explain to the public. The ultimate bureaucratic purpose of the exercise was also to arrive at some estimate for the SCC that could be used as federal rules and regulations were determined. For this they would need to arrive at just one set of estimates for the SCC. Presumably this would need to be based on a “most likely” scenario of some sort. But it is not clear to me that combining four “business-as-usual” scenarios with one where there are some measures to reduce carbon emissions would provide this.

The fundamental point remains that any given SCC estimate can only be defined in terms of some specified scenario on the future path of CO2 (and other greenhouse gas) emissions as well as the path of future GDP and population. There will also be at least implicit assumptions being made on future technologies and other factors. Clarity on the scenarios assumed is needed in order to understand what the SCC values mean and how any specific set of such estimates may relate to others.

h) There is also a tremendous amount of uncertainty in how strong the feedback effects will be. The IAM models attempted to incorporate this by assigning a probability distribution to the “equilibrium climate sensitivity” parameter, but there is essentially no data – only theories – on what that distribution might be.

Uncertainty on how strong such feedback effects might be is central to one of the critiques of the SCC concept. But this is best addressed as part of a review of the at times heated debate on what discount rate should be used. That issue will be addressed next.

D. Discount Rates

CO2 emitted now will remain in the atmosphere for centuries. Thus it will cause damage from the resulting higher global temperatures for centuries. The SCC reflects the value, discounted back to the date when the CO2 is released into the air, of that stream of damages from the date of release to many years from that date. Because of the effects of compounding over long periods of time, the resulting SCC estimate will be highly sensitive to the discount rate used. If the discount rate is relatively high, then the longer-term effects will be heavily discounted and will not matter as much (in present-day terms). The SCC will be relatively low. And if the discount rate is relatively low, the longer-term effects will not be discounted by as much, hence they will matter more, and hence the SCC will be relatively high.

This was seen in the SCC estimates of the IWG. As shown in the table at the end of Section B above, for CO2 that would be emitted in 2020 the IWG estimates that the SCC would be just $14 per ton of CO2 if a discount rate of 5.0% is used but $76 per ton if a discount rate of 2.5% is used – more than five times higher. And while a 2.5% rate might not appear to be all that much different from a 3.0% rate, the SCC is $51 at a 3.0% rate. The $76 per ton at 2.5% is almost 50% higher. And all of these estimates are for the exact same series of year-by-year damages resulting from the CO2 emission: All that differed was the discount rate used to discount back to the base year the stream of year-by-year damages.

Different economists have had different views on what the appropriate discount rate should be, and this became a central point of contention. But it mattered not so much because the resulting SCC estimates differed. They did – and differed significantly – and that was an obvious consequence. But the importance of the issue stemmed rather from the implication for policy. As was briefly discussed above, Professor William Nordhaus (an advocate for a relatively high discount rate) came to the conclusion that the optimal policy to be followed would be one where not much should be done directly to address CO2 emissions in the early years. Rather, Nordhaus concluded, society should only become aggressive in reducing CO2 emissions in subsequent decades. The way he put it in his 2008 book (titled A Question of Balance – Weighing the Options on Global Warming Policies), pp. 165-166:

“One of the major findings in the economics of climate change has been that efficient or ‘optimal’ policies to slow climate change involve modest rates of emission reductions in the near term, followed by sharp reductions in the medium and long terms. We might call this the ‘climate-policy ramp’, in which policies to slow global warming increasingly tighten or ramp up over time.”

But while Nordhaus presented this conclusion as a “finding” of the economics of climate change, others would strongly disagree. In particular, the debate became heated following the release in 2006 of the Stern Review, prepared for the UK Treasury. As was briefly noted above, Professor Stern (now Lord Nicholas Stern) came to a very different conclusion and argued strongly that action to reduce CO2 and other greenhouse gas emissions was urgent as well as necessary. It needed to be addressed now, not largely postponed to some date in the future.

Stern used a relatively low discount rate. Nordhaus, in a review article published in the Journal of Economic Literature (commonly abbreviated as JEL) in September 2007, argued that one can fully account for the difference in the conclusions reached between him and Stern simply from the differing assumptions on the discount rate. (He also argued he was right and Stern was wrong.) While there was in fact more to it than only the choice of the discount rate, discount rates were certainly an important factor.

There have now been dozens, if not hundreds, of academic papers published on the subject. And the issue in fact goes back further, to the early 1990s when Nordhaus had started work on his DICE models and William Cline (of the Peterson Institute for International Economics) published The Economics of Global Warming (in 1992). It was the same debate, with Nordhaus arguing for a relatively high discount rate and Cline arguing for a relatively low one.

I will not seek to review this literature here – it is vast – but rather focus on a few key articles in order to try to elucidate the key substantive issues on what the appropriate discount rate should be. I will also focus on the period of the few years following the issuance of the Stern Review in late 2006, where much of the work published was in reaction to it.

Nordhaus will be taken as the key representative of those arguing for a high discount rate. Nordhaus’s own book on the issues (A Question of Balance) was, as noted, published in 2008. While there was a technical core, Nordhaus also wrote portions summarizing his key points in a manner that should be accessible to a non-technical audience. He also had a full chapter dedicated to critiquing Stern. Stern and the approach followed in the Stern Review will be taken as the key representative of those arguing for a low discount rate.

In addition, the issue of uncertainty is central and critical. Two papers by Professor Martin Weitzman, then of Harvard, were especially insightful and profound. One was published in the JEL in the same September 2007 issue as the Nordhaus article cited above (and indeed with the exact same title: “A Review of The Stern Review of the Economics of Climate Change“). The other was published in The Review of Economics and Statistics in February 2009, and is titled “On Modeling and Interpreting the Economics of Catastrophic Climate Change”.

These papers will be discussed below in a section on Risk and Uncertainty. They were significant papers, with implications that economists recognized were profoundly important to the understanding of discount rates as they apply to climate change and indeed to the overall SCC concept itself. But while economists recognized their importance, they have basically been ignored in the general discussion on climate change and the role of discount rates – at least in what I have seen. They are not easy papers to go through, and non-economists (and indeed many economists) will find the math difficult.

The findings of the Weitzman papers are important. First, he shows that one should take into account risk (in the sense of Knight) and recognize that there are risks involved in the returns one might expect from investments in the general economy and in investments to reduce greenhouse gas emissions. The returns on each will vary depending on how things develop in the world – which we cannot know with certainty – but the returns on investments to reduce CO2 emissions will likely not vary in the same direction as returns on investments in the general economy. Rather, in situations where there is major damage to the general economy due to climate change, the returns to investments that would have been made to reduce CO2 emissions would be especially high. Recognizing this, the appropriate discount rate on investments to reduce CO2 emissions should be, as we will discuss below, relatively low. Indeed, they will be close to (or even below) the risk-free rate of return (commonly taken to be in the range of zero to 1%). This was addressed in Weitzman’s 2007 paper.

Second and more fundamentally, when one takes into account genuine uncertainty (in the sense of Knight) in the distribution of possible outcomes due to feedback and other effects (thus leading to what are called “fat tails”), one needs to recognize a possibly small, but still non-zero and mathematically significant chance of major or even catastrophic consequences if climate change is not addressed. The properly estimated SCC would then be extremely high, and the discount rate itself is almost beside the point. The key more practical conclusion was that with such feedback effects and uncertainties, any estimates of the SCC will not be robust: They will depend on what can only be arbitrary assumptions on how to handle those uncertainties, and any SCC estimate will be highly sensitive to the particular assumptions made. But the feedback effects and uncertainties point the SCC in the direction of something very high. This was covered by Weitzman in his 2009 paper.

The Weitzman papers were difficult to work through. In part for this reason, it is useful also to consider a paper published in 2010 authored by a group of economists at the University of Chicago. The paper builds on the contributions of Weitzman but also explains the basic points of Weitzman from a different perspective. The paper is still mathematical but complements the Weitzman papers well. The authors are Professors Gary Becker, Kevin Murphy, and Robert Topel, all then at the University of Chicago. Gary Becker has long been a prominent member of the faculty of economics at Chicago and has won a Nobel Prize in Economics. This is significant, as some might assume Weitzman (at Harvard) was not taking seriously enough a market-based approach (where the assumption of Nordhaus and others was that the discount rate should reflect the high rate of return one could obtain in the equity markets). The Chicago School of Economists is well known for its faith in markets, and the fact that Becker would co-author an article that fully backs up Weitzman and his findings is significant.

The paper of Becker and his co-authors is titled “On the Economics of Climate Policy”, and was published in the B.E Journal of Economic Analysis & Policy. But it also has not received much attention (at least from what I have seen), possibly because the journal is a rather obscure one.

The sub-sections below will discuss, in order, Nordhaus and the arguments for a relatively high discount rate; Stern and the arguments for a relatively low discount rate; and the impact of risk and uncertainty.

a) Nordhaus, and the arguments for a relatively high discount rate

Nordhaus, together with others who argued the discount rate should be relatively high, argue that the discount rate should be viewed as a measure of the rate at which an investment could grow in the general markets. In A Question of Balance (pp. 169-170) he noted that in his usage, the terms “real return on capital”, “real interest rate”, “opportunity cost of capital”, “real return”, and “discount rate”, all could be used interchangeably.

One can then arrive, he argued, at an estimate of what the proper discount rate should be by examining what the real return on investments had been. Nordhaus noted, for example, that the real, pre-tax, return on U.S. nonfinancial corporations over the previous four decades (recall that this book was published in 2008) was 6.6% per year on average, with the return over the shorter period of 1997 to 2006 equal to 8.9% per year. He also noted that the real return on 20-year U.S. Treasury securities in 2007 was 2.7%. And he said that he would “generally use a benchmark real return on capital of around 6 percent per year, based on estimates of rates of return from many studies” (page 170).

The specific discount rate used is important because of compound interest. While in the calculation of the SCC the discount rate is used to bring to the present (or more precisely, the year in which the CO2 is emitted) what the costs from damages would be in future years if an extra ton of CO2 is emitted today, it might be clearer first to consider this in the other direction – i.e. what an amount would grow to from the present day to some future year should that amount grow at the specified discount rate.

If the discount rate assumed is, say, 6.0% per annum, then $1 now would grow to $18.42 in 50 years. In 100 years, that $1 would grow to $339.30. These are huge. The basic argument Nordhaus makes is that one could invest such resources in the general economy today, earn a return such as this, and then in the future years use those resources to address the impacts of climate change and/or at that point then make the investments required to stop things from getting worse. One could, for example, build tall sea walls around our major cities to protect them from the higher sea level. If the cost to address the damages arising from climate change each year going forward is less than what one could earn by investing those funds in the general economy (rather than in reducing CO2 emissions), then – in this argument – it would be better to invest in the general economy. The discount rate (the real return on capital) defines the dividing line between those two alternatives. Thus by using that discount rate to discount the stream of year-by-year damages back to the present, one can determine how much society should be willing to pay for investments now that would avoid the damages arising from an extra ton of CO2 being emitted. The sum of that stream of values discounted at this rate is the SCC.

There are, however, a number of issues. One should note:

i) While it is argued that the real return on capital (or real rate of interest, or similar concept) should be used as an indication of what the discount rate should be, there are numerous different asset classes in which one can invest. It is not clear which should be used. It is generally taken that US equity markets have had a pre-tax return of between 6 and 7% over long periods of time (Nordhaus cites the figure of 6.6%), but one could also invest in highly rated US Treasury bonds (with a real return of perhaps 2 to 3%), or in essentially risk-free short-term US Treasury bills (with a real return on average of perhaps 0 to 1%). There are of course numerous other possible investments, including in corporate bonds, housing and land, and so on.

The returns vary for a reason. Volatility and risk are much higher in certain asset classes (such as equities) than in others (such as short-term Treasury bills). Such factors matter, and investors are only willing to invest in the more volatile and risky classes such as equities if they can expect to earn a higher return. But it is not clear from this material alone how one should take into account such factors when deciding what the appropriate comparator should be when considering the tradeoff between investments in reducing CO2 emissions and investments in “the general economy”. And even if one restricted the choices just to equity market returns, it is not clear which equity index to use. There are many.

ii) The returns on physical investments (the returns that would apply in the Nordhaus recommendation of investing in the general economy as opposed to making investments to reduce CO2 emissions) are also not the same thing as returns on investments in corporate equities. Corporations, when making physical investments, will fund those investments with a combination of equity capital and borrowed capital (e.g. corporate bonds, bank loans, and similar). The returns on the equity invested might well be high, but this is because that equity was leveraged with borrowed funds paying a more modest interest rate. Rather, if one believes we should focus on the returns on corporate investments, the comparison should be to the “weighted average cost of capital” (the weighted average cost of the capital invested in some project), not on returns on investments just in corporate equity.

iii) Even if a choice were made and past returns were observed, there is no guarantee that future returns would be similar. But it is the future returns that matter.

iv) This is also all US-centric. But the determination of the SCC is based on the global impacts, and hence the discount rate to use should be based not solely on how one might invest in the US but rather on what the returns might be on investments around the world. The US equity markets have performed exceptionally well over the past several decades, while the returns in other markets have in general been less. And some have been especially low. The Japan Nikkei Index, for example, is (as I write this) still 15% below the value it had reached in 1989, over a third of a century ago.